Cache coherence cortex a9 code

For example, imagine that code reads an array byte-by-byte and does some simple processing on each byte. For example, Ethernet DMA descriptors with a size of 32 B or 16 B perform better, when put in non-cacheable memory, but Rx data buffers with a typical required size of 1522 B gain from D-cache tremendously.Īlso it's a misconception that cache improves performance only for repetitive accesses. But, on a buffers larger than the cache line size and especially in a zero-copy solutions, the cache will have a major positive impact on a performance - much larger than a loss because of an SCB_***() calls. In such scenarios indeed disabling the cache on those buffers could be a better choice. Second, on Rx side the buffers will still have to fill the whole cache line and therefore waste relatively (to the actually used part of buffer) large amounts of memory. First, the CPU time spent on SCB_***() calls could be more than the gain from using cache.

Cache coherence cortex a9 series#

Unfortunately for Cortex-M processors ARM doesn't provide a clear explanation or example, but they do provide a short explanation for Cortex-A and Cortex-R series processors.įor a buffers significantly smaller than the cache line size (32 B on Cortex-M7), cache maintenance can be inefficient because of two factors. In this example the 67 is aligned to a multiple of 32 and respectively the buffer size is set to 96 bytes. And the ALIGN_BASE2_CEIL() is a custom macro, which aligns an arbitrary number to the nearest upper multiple of a base-2 number. The _ALIGNED() is a CMSIS defined macro for aligning the address of a variable. CMSIS defined constant for data cache line size is _SCB_DCACHE_LINE_SIZE and it is 32 bytes for Cortex-M7 processor.

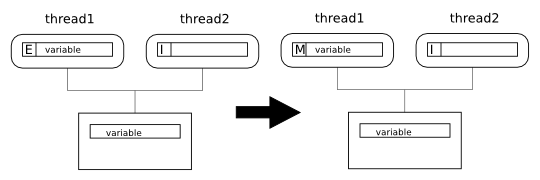

For that to be the case, the buffer address and size must be aligned to the size of cache line.

To ensure that it does not happen, the buffer has to exactly fill an integer number of cache lines. Therefore cache invalidation for Rx buffers must be done before and after DMA transfer and skipping any of these will lead to Rx buffer corruption.ĭoing cache invalidation on arbitrary buffer can corrupt an adjacent memory before and after the particular buffer. The second cache invalidation at line 11 after the DMA transfer ensures that the cache lines, which during the DMA transfer could be read from memory by speculative reads, are discarded. The first cache invalidation at line 6 before the DMA transfer ensures that during the DMA transfer the cache has no dirty lines associated to the buffer, which could be written back to memory by cache eviction. ProcessReceivedData(abBuffer, nbReceived) SCB_InvalidateDCache_by_Addr(abBuffer, nbReceived) Size_t nbReceived = DMA_RxGetReceivedDataSize() Later, when the DMA has completed the transfer.

SCB_InvalidateDCache_by_Addr(abBuffer, sizeof(abBuffer)) Prepare and start the DMA Rx transfer. Uint8_t abBuffer _ALIGNED(_SCB_DCACHE_LINE_SIZE) Prepare and start the DMA Tx transfer.įor Rx (from peripheral to memory) transfers the maintenance is a bit more complex: #define ALIGN_BASE2_CEIL(nSize, nAlign) ( ((nSize) + ((nAlign) - 1)) & ~((nAlign) - 1) ) Also there is another discussion, where a real-life example of cache eviction was detected.įor Tx (from memory to peripheral) transfers the maintenance is rather simple: // Application code. This topic is inspired by discussions in ST forum and ARM forum, where a proper cache maintenance was sorted out and an example of a real-life speculative read was detected.
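The alignment requirement for Rx buffers can be checked with plain arithmetic, even on a host machine. The following is only a minimal sketch under stated assumptions: `CACHE_LINE_SIZE` is a stand-in for the CMSIS constant so that the code compiles without CMSIS headers, and `ALIGN_BASE2_FLOOR()`, `CacheLinesSpanBytes()` and `CacheLinesSharedBytes()` are hypothetical helpers introduced here for illustration. Only `ALIGN_BASE2_CEIL()` comes from the article itself.

```c
#include <stddef.h>
#include <stdint.h>

/* Assumption: stand-in for the CMSIS __SCB_DCACHE_LINE_SIZE (32 on Cortex-M7),
   so that this sketch also compiles on a host without CMSIS headers. */
#define CACHE_LINE_SIZE  32u

/* Macro from the article: round nSize up to a multiple of a power-of-two nAlign. */
#define ALIGN_BASE2_CEIL(nSize, nAlign)  ( ((nSize) + ((nAlign) - 1)) & ~((nAlign) - 1) )

/* Hypothetical counterpart: round nValue down to a multiple of a power-of-two nAlign. */
#define ALIGN_BASE2_FLOOR(nValue, nAlign)  ( (nValue) & ~((nAlign) - 1) )

/* Total size in bytes of all cache lines touched by the range [nAddr, nAddr + nbSize). */
static size_t CacheLinesSpanBytes(uintptr_t nAddr, size_t nbSize)
{
    uintptr_t nFirst = ALIGN_BASE2_FLOOR(nAddr, (uintptr_t)CACHE_LINE_SIZE);
    uintptr_t nEnd   = ALIGN_BASE2_CEIL(nAddr + nbSize, (uintptr_t)CACHE_LINE_SIZE);
    return (size_t)(nEnd - nFirst);
}

/* Bytes that do not belong to the buffer but share its cache lines.
   Invalidating the buffer can discard pending writes to exactly these bytes. */
static size_t CacheLinesSharedBytes(uintptr_t nAddr, size_t nbSize)
{
    return CacheLinesSpanBytes(nAddr, nbSize) - nbSize;
}
```

With these definitions, `CacheLinesSharedBytes(0x20000000u, 96)` evaluates to 0, which is the safe case of a 96 byte buffer on a 32 byte boundary. A 67 byte buffer placed at the example address 0x20000010 shares 29 bytes of its cache lines with neighbouring data, and those are the bytes an invalidation could corrupt.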
